1.3. What Exactly is AI? Models and Categories

Hou I (Esther) Lau

At its core, Artificial Intelligence (AI) refers to the simulation of human intelligence in machines. Machines are built to mimic cognitive functions like learning, reasoning, perceiving, or even creating. But there are many layers to AI.

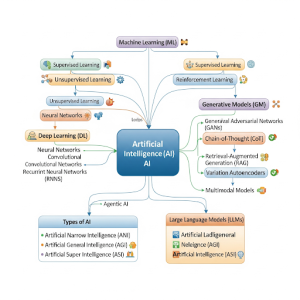

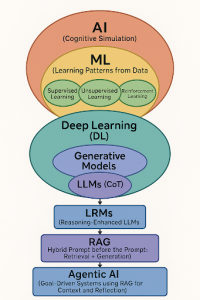

At the top, we have AI as the broadest concept. Within AI, we find Machine Learning (ML), which focuses on learning patterns from data and is the engine behind most of today’s AI tools. Next, we move into Deep Learning (DL), a type of ML that uses layered neural networks loosely inspired by the structure of the human brain.

Deep Learning powers most of today’s Generative Models, which can create new content. Within this group, we find Large Language Models (LLMs), such as the models behind ChatGPT or Gemini. LLMs can be paired with techniques like Retrieval-Augmented Generation (RAG), which combines what the model learned during training with information retrieved from external sources at the moment a question is asked. From there, we reach Multimodal Models, which can process multiple types of input (text, images, audio, video).

Finally, we encounter Agentic AI. These systems are goal-oriented and adaptive, combining reasoning, retrieval, and planning to carry out multi-step tasks autonomously.

Key Definitions

- Artificial Intelligence (AI): The simulation of human intelligence in machines, including learning, reasoning, perception, and creation.

- Machine Learning (ML): A subset of AI where systems learn patterns from data rather than following only hand-written rules. Major families include:

  - Supervised learning: training with labeled examples.

  - Unsupervised learning: finding structure without labels.

  - Reinforcement learning (RL): learning by trial, error, and rewards.

- Deep Learning (DL): A form of ML that uses multi-layer neural networks to recognize complex patterns in text, images, audio, or video.
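The difference between hand-written rules and learning from data can be made concrete with a tiny supervised learner. This is a minimal sketch, not how production spam filters work: the two features (link count and exclamation-mark count), the labeled emails, and the nearest-centroid method are all illustrative assumptions. The program is never told a rule like "many links means spam"; it derives an average example for each label and assigns new items to the closest one.

```python
# Toy supervised learning: a nearest-centroid classifier.
# Features and data are invented for illustration only.

def train(examples):
    """Learn one average point (centroid) per label from labeled examples."""
    sums, counts = {}, {}
    for (x, y), label in examples:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sx / counts[label], sy / counts[label])
            for label, (sx, sy) in sums.items()}

def predict(centroids, point):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    px, py = point
    return min(centroids,
               key=lambda lbl: (centroids[lbl][0] - px) ** 2
                               + (centroids[lbl][1] - py) ** 2)

# Hypothetical features per email: (number of links, number of exclamation marks).
labeled = [((8, 5), "spam"), ((9, 7), "spam"), ((7, 6), "spam"),
           ((1, 0), "not spam"), ((0, 1), "not spam"), ((2, 0), "not spam")]

spam_model = train(labeled)
print(predict(spam_model, (6, 4)))
print(predict(spam_model, (1, 1)))
```

Swapping in different labeled examples changes the behavior without anyone rewriting a rule, which is the core idea of supervised learning.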

Models You Will See

- Generative models: produce new content such as text, images, or audio.

- Large Language Models (LLMs): deep learning models trained on large text corpora that predict likely next tokens to generate language.

- Retrieval-Augmented Generation (RAG): combines a generator with a search step so outputs can cite or draw from external sources.

- Multimodal models: accept, and sometimes generate, more than one modality, such as text combined with images or audio.

- Agentic systems: goal-directed setups that plan steps, call tools, and reflect before answering.
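The retrieval half of RAG can be sketched in a few lines. This is a simplified illustration under stated assumptions: the three documents are made up, relevance is scored by counting shared words (real systems usually use embeddings or a search engine), and the final call to an LLM is left out. The sketch only shows how retrieved text is placed into the prompt so the model can draw on it.

```python
# Toy Retrieval-Augmented Generation: retrieval plus prompt assembly only.
# Documents and the word-overlap scoring are illustrative assumptions.
import re

DOCUMENTS = [
    "The library opens at 8 a.m. on weekdays and 10 a.m. on weekends.",
    "Course registration for the fall term closes on August 15.",
    "The campus writing center offers free one-on-one tutoring.",
]

def tokenize(text):
    """Lowercase the text and return its set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents):
    """Combine retrieved passages with the question.
    A real RAG system would send this prompt to an LLM (not shown here)."""
    context = "\n".join(f"- {p}" for p in retrieve(question, documents))
    return ("Answer using only the sources below.\n"
            f"Sources:\n{context}\n"
            f"Question: {question}")

print(build_prompt("When does course registration close?", DOCUMENTS))
```

Because the answer-relevant sentence is pasted into the prompt, the generator can ground its reply in it, and the system can show which source it used.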

Scope Terms

- Artificial Narrow Intelligence (ANI). This is the AI we live with today. It is task-specific. Think about facial recognition on your phone, or a spam filter in your email. These systems are very good at one thing. But they cannot transfer that knowledge to other domains. For example, your Roomba cannot learn to play chess, even if it vacuums really well.

- Artificial General Intelligence (AGI). This refers to hypothetical AI that could think and reason across domains the way humans do. AGI would be able to learn a language, solve math problems, empathize in conversations, and maybe even cook dinner, all without being explicitly programmed to do each task separately. We are not there yet. And depending on who you ask, we may be decades away… or just a few years.

- Artificial Superintelligence (ASI). This one is more philosophical. ASI refers to machines that surpass human intelligence in every possible way. It would not only solve problems faster, it might solve problems we cannot even imagine. But it also raises important questions: Can it be aligned with human values? And what happens if it cannot?

AI Controls and Feedback

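- Temperature: a sampling setting that affects the randomness of AI output. Its range depends on the tool (often 0 to 1, and 0 to 2 in some APIs). Lower values make the model favor its most likely next words, producing more predictable text. Higher values spread probability across more options, producing more diverse and creative, but sometimes less coherent, text.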

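- Reinforcement Learning from Human Feedback (RLHF): a training step that aligns model behavior with human preferences. People compare or rank model responses, and those judgments are used (typically through a learned reward model) to fine-tune the model with reinforcement learning.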
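A common way temperature is applied, sketched here as an illustration rather than any specific product's implementation, is to divide the model's raw scores for candidate next words (called logits) by the temperature before converting them into probabilities with the softmax function. The three candidate words and their scores below are invented. A temperature of exactly 0 would divide by zero, so tools that offer it usually special-case it as "always pick the top word."

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities; temperature must be > 0."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(words, logits, temperature, rng=random):
    """Draw one word at random, weighted by the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical scores for the word after "The sky is".
words = ["blue", "clear", "falling"]
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(words, probs)})

print(sample_next_word(words, logits, temperature=1.0))
```

At low temperature nearly all probability lands on "blue"; at higher temperature "clear" and even "falling" become realistic picks, which is where both the extra creativity and the extra risk of odd output come from.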

Quick Self-Check: Core Concepts

Answer five quick questions to test your understanding of the distinctions above.

Common Misconceptions

“LLMs look up answers verbatim.”

- LLMs generate text by predicting probable next tokens. They do not search a database by default. Retrieval-augmented setups can add search when needed.

“Deep learning equals understanding.”

- Models can match and recombine patterns very well. That does not imply human-style comprehension or intent.

“Generative models always create factual content.”

- Generation can be persuasive without being correct. That is why verification and retrieval are useful in professional contexts.

What AI Is Not

After surveying the landscape of AI, from rule-based systems to agentic models, it can be tempting to think that machines are basically human now. They are not. To use these tools wisely, we need to separate hype from reality. Modern AI systems can produce convincing text, images, and code, but they do not think, feel, or understand in human terms.

Why does AI seem intelligent? The paradox is that AI looks smart not because it understands, but because it is fast and vast. It processes enormous datasets, recognizes statistical patterns, and generates fluent responses within seconds. What creates the illusion of intelligence is speed, scale, and mimicry.

Core truth: AI predicts; it does not comprehend. Large Language Models (LLMs), such as those behind ChatGPT, Gemini, or Claude, are essentially next-word (more precisely, next-token) prediction systems. They generate statistically likely sequences based on patterns in their training data, not on meaning.

- AI does not know what it is saying.

- AI does not understand human emotions.

- AI does not intend to be insightful, funny, or creative.
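"Next-word prediction" can be illustrated with a bigram model, a far simpler ancestor of today's LLMs that only counts which word tends to follow which. Real LLMs use deep neural networks over tokens and long contexts, so treat this as a sketch of the principle, not of how ChatGPT or similar systems are built. The tiny training text is invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen.
    Ties go to the follower encountered first in the training text."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows, start, length=5):
    """Chain predictions greedily; only counts are used, never meaning."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
bigram_model = train_bigrams(corpus)
print(generate(bigram_model, "the"))
```

Starting from "the", this prints "the cat sat on the cat": locally fluent, statistically plausible, and meaningless. The model has no idea what a cat is; it only knows which words co-occurred.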

How does AI “read”? Not as humans do. It does not store sentences or pages the way a library stores books (although it can sometimes reproduce passages that appeared often in its training data). Instead, it encodes text into numerical vectors that capture relationships between words and concepts. When prompted to summarize a novel or explain a theory, it reconstructs a likely answer from these patterns rather than recalling content directly.
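The idea of "numerical vectors that capture relationships" can be shown with cosine similarity, a standard way to compare such vectors. The three-dimensional vectors below are hand-made assumptions chosen for illustration; real models learn their vectors from data, typically with hundreds or thousands of dimensions.

```python
import math

# Hand-made toy "embeddings" (invented values, not from any real model).
EMBEDDINGS = {
    "cat":    [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "car":    [0.1, 0.2, 0.95],
}

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

for other in ("kitten", "car"):
    score = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS[other])
    print("cat vs", other, round(score, 3))
```

"Cat" ends up much closer to "kitten" than to "car" purely because of the numbers, which is how a model can treat related concepts as related without understanding either one.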

Extensions and limits: Some newer systems use techniques such as Retrieval-Augmented Generation (RAG), which pulls information from external sources such as document collections or the web, or Chain of Thought (CoT) prompting, which encourages the model to work through a problem step by step. Yet even with these additions, the foundation remains the same: AI predicts patterns. The outputs can be impressive, but they are not the product of human-like understanding.

📚 Weekly Reflection Journal

In your discipline, where could a narrow AI help with one specific task, and where would you still rely on human judgment? Note one example for each.

Apply the Terms

Complete a short cloze activity to reinforce the definitions using the exact terms above.

Looking Ahead

Next we will compare learning in minds and machines, then examine the mechanisms that power modern systems. That will prepare us to make informed choices about when and how to use AI in teaching and professional work.